Does Sbac Give Easy Test to Dumb People

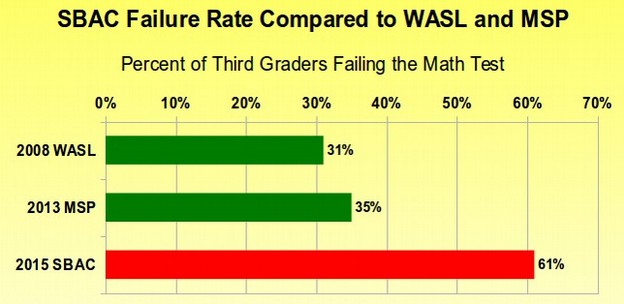

In this article, I will explain why I oppose toxic and unfair high stakes bubble tests such as the SBAC test and why I will end them as a graduation requirement. I am the only candidate for State Superintendent who has a firm plan to end the high stakes tests as a graduation requirement in our state. The SBAC test is an unfair bubble test designed to label as failures more than half of the students who take the test. Yet two of my opponents (Reykdal and Seaquist) have voted to make the SBAC test a graduation requirement in Washington State!

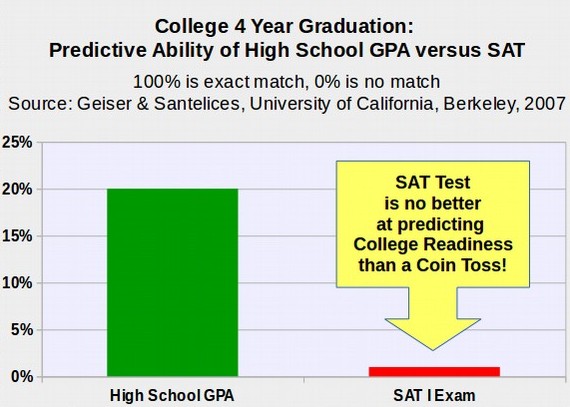

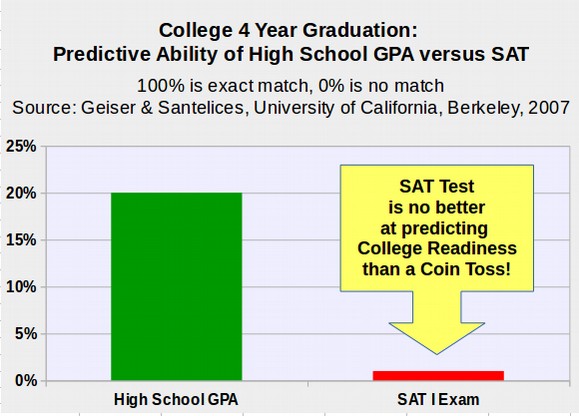

The makers of the SBAC test falsely claim that it can predict if students are "college ready." In fact, no bubble test has ever been able to predict if a student is college ready. The only predictor of college readiness is a student's high school GPA.

In short, the SBAC test not only harms students, it is worthless as an assessment tool.

In 2015, 62,000 parents in Washington opted their children out of the SBAC test - resulting in Washington having a "participation rate" of only 91%.

Since federal law requires a rate of at least 95%, Washington received a letter from the Department of Education threatening to reduce federal funding unless school districts completed an enforcement plan. In fact, no school district has ever lost federal funds due to a failure to force students to take high stakes tests. In December 2015, Congress passed the new ESSA, which prohibits the Department of Education from threatening states with loss of funds.

Federal Department of Education Fails to Comply with New ESSA

At a Senate hearing on April 12, 2016, Senator Lamar Alexander, one of the prime authors of the new ESSA, scolded the Department of Education for continuing to threaten states with loss of federal funds and violating several key provisions of the new ESSA. Senator Alexander stated: "Your department proposed a rule that would do exactly what the law says it shall not do... Not only is what you are doing against the law, but the way you are doing it is against another provision of the law... If the department (of Education) tries to force states to follow regulations that violate the law, I'll tell them to take you to court."

http://www.help.senate.gov/chair/newsroom/press/chairman-alexander-already-disturbing-evidence-that-education-department-is-ignoring-the-new-law

Washington State OSPI Fails to Comply with New ESSA

Sadly, on that same day, April 12, 2016, the Superintendent of Public Instruction violated the new ESSA by sending letters to about 100 school districts threatening them with a loss of federal funds unless they submit an enforcement plan.

What this letter completely ignored, besides the new federal law, is that parents and students at many schools are completely against the SBAC test. In Seattle, at Nathan Hale High School, 99% of students opted out. At Garfield High School, 98% of students opted out. At Ballard High School, 97% of students opted out. At Ingraham High School, 95% of students opted out. It does not matter what kind of plan the Seattle School District comes up with. Parents and students are simply going to keep opting out because the problem is not the students who are opting out - the problem is that the SBAC test itself is unfair and harmful and parents know it.

Candidates for Superintendent asked to respond to the OSPI Threat

On April 24, 2016, Carolyn Leith, a leader of a Parents and Students Rights group, Parents Across America, sent an email to all six candidates for Superintendent of Public Instruction asking us to make a statement on this shocking threat by OSPI. She asked that our responses be limited to 500 words. However, this issue cannot be properly answered in only 500 words. I have therefore published this more detailed statement on our campaign website.

As one of the six candidates, I want to begin by pointing out that for years I have been a leader in Washington state opposing the unfair and harmful SBAC test. I have written dozens of reports explaining why the SBAC test harms children. I have also published a book called Weapons of Mass Deception summarizing how the SBAC test harms children. You can read this book here: https://weaponsofmassdeception.org/

In 2015, I started the website Opt Out Washington to give parents more information on why and how to opt their children out of the unfair SBAC test. More than 50,000 Washington parents have downloaded our opt out form. Here is a link to this website: http://optoutwashington.org/

By contrast, in June 2013, two other candidates, Larry Seaquist and Chris Reykdal, voted for House Bill 1450 - the bill that forced the unfair SBAC test on students here in Washington state.

http://app.leg.wa.gov/billinfo/summary.aspx?bill=1450&year=2013

Since 2013, Chris Reykdal has been the main cheerleader for the SBAC test in Olympia. In 2015 and 2016, Reykdal sponsored House Bill 2214 - a bill to accelerate the transition to the SBAC test from the Class of 2019 to the Class of 2017.

http://app.leg.wa.gov/billinfo/summary.aspx?bill=2214&year=2015

Reykdal claims that his bill "protects the rights of parents to opt their child out of the SBAC test." This is not true. In fact, Section 102 states that high school Juniors who opt out will be punished by having a zero score placed in their permanent record. They will also be required to take an additional course during their Senior year that is "at a higher course level than the student's most recent coursework in the content area in which the student received a passing grade of C or higher." So if a student completes Algebra 2 during their Junior year but either opts out of the SBAC math test or takes it and fails, they will be required to complete a Precalculus course during their Senior year in order to graduate. Reykdal's draconian bill would make Washington one of the only states in the nation that would punish opt out students by placing them at risk of not graduating from high school.

To give you an idea of how many school districts were threatened by OSPI, below is a list of 89 school districts that received the threatening letter from OSPI and now must force about 200 schools to draft a plan to create greater compliance with the illegal SBAC test. (Since even one school not being in compliance leads to a letter for the whole school district, there are certainly more school districts than these that received letters.) These school districts are ranked by the percentage of their students who opted out of the 11th grade SBAC test, starting with Bainbridge Island, which had one of the highest opt out rates in the nation at 99%:

| Rank | School District | Opt out rate (%), actual per OSPI Report Card |
| --- | --- | --- |
| 1 | Bainbridge | 99 |
| 2 | Eastmont | 98 |
| 3 | Issaquah | 97 |
| 4 | Snoqualmie Valley | 96 |
| 5 | Stanwood | 95 |
| 6 | Aberdeen | 95 |
| 7 | La Conner | 95 |
| 8 | Tenino | 95 |
| 9 | Hoquiam | 95 |
| 10 | Kennewick | 95 |
| 11 | Toppenish | 95 |
| 12 | Snohomish | 87 |
| 13 | Lopez | 87 |
| 14 | Enumclaw | 86 |
| 15 | Orcas Island | 86 |
| 16 | Edmonds | 85 |
| 17 | Mercer Island | 85 |
| 18 | Mukilteo | 84 |
| 19 | Richland | 84 |
| 20 | Kent | 82 |
| 21 | Seattle | 81 |
| 22 | Lake Washington | 80 |
| 23 | North Kitsap | 80 |
| 24 | Battle Ground | 77 |
| 25 | Ellensburg | 76 |
| 26 | Peninsula | 75 |
| 27 | Cle Elum | 75 |
| 28 | Port Townsend | 73 |
| 29 | Burlington | 72 |
| 30 | Central Valley | 71 |
| 31 | Federal Way | 71 |
| 32 | Olympia | 70 |
| 33 | Highline | 70 |
| 34 | Bellingham | 68 |
| 35 | Port Angeles | 68 |
| 36 | Omak | 68 |
| 37 | Stevenson | 67 |
| 38 | Tahoma | 65 |
| 39 | Central Kitsap | 64 |
| 40 | Tacoma | 63 |
| 41 | Tumwater | 61 |
| 42 | La Center | 60 |
| 43 | Lynden | 60 |
| 44 | Northshore | 60 |
| 45 | Marysville | 57 |
| 46 | Monroe | 53 |
| 47 | Everett | 52 |
| 48 | Sumner | 52 |
| 49 | Bremerton | 51 |
| 50 | Cape Flattery | 50 |
| 51 | Mead | 50 |
| 52 | Wenatchee | 49 |
| 53 | North Thurston | 49 |
| 54 | Chehalis | 48 |
| 55 | Auburn | 47 |
| 56 | Walla Walla | 47 |
| 57 | San Juan | 46 |
| 58 | Shoreline | 44 |
| 59 | Chimacum | 40 |
| 60 | Vashon Island | 40 |
| 61 | Camas | 39 |
| 62 | Evergreen | 39 |
| 63 | Blaine | 39 |
| 64 | Renton | 39 |
| 65 | Spokane | 38 |
| 66 | Pasco | 38 |
| 67 | Yakima | 36 |
| 68 | Ferndale | 36 |
| 69 | Clover Park | 35 |
| 70 | East Valley | 34 |
| 71 | Quilcene | 34 |
| 72 | Sequim | 33 |
| 73 | Washougal | 33 |
| 74 | Zillah | 32 |
| 75 | Franklin Pierce | 31 |
| 76 | Naselle | 30 |
| 77 | Shelton | 30 |
| 78 | Raymond | 30 |
| 79 | North River | 27 |
| 80 | Lake Stevens | 26 |
| 81 | Hockinson | 24 |
| 82 | Newport | 24 |
| 83 | Arlington | 23 |
| 84 | Vancouver | 23 |
| 85 | Mary Walker | 22 |
| 86 | Eatonville | 21 |
| 87 | Longview | 20 |
| 88 | Pullman | 20 |
| 89 | Riverview | 20 |

What School Districts should do to respond to the letter from OSPI

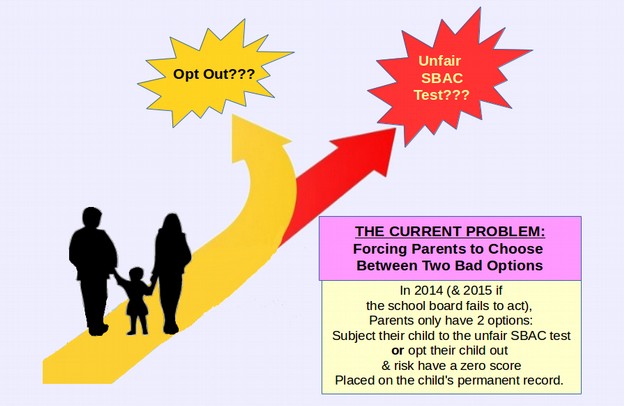

School districts are required to state why they had such a high opt out rate and what they will do to reduce the opt out rate. For the reason why the opt out rate is so high, school districts should point out that the SBAC test is an unfair, unreliable, invalid test that does not measure whether students are achieving at grade level. The SBAC test therefore does not comply with federal law. The failure of Randy Dorn and OSPI is therefore the reason there is a high opt out rate. Currently parents only have two options: force their kids to take an unfair SBAC test or opt out of testing completely.

As for what school districts should do to reduce the opt out rate, they should apply for a local option that is fair, valid, reliable and accurately measures whether students are achieving at grade level. There are only two assessment methods that have these essential characteristics. Those options are teacher grades and the Iowa Assessment. If school districts want to use the most accurate assessment method, then I recommend using Teacher Grades - for example by reporting the student grade in their math class to meet the math assessment requirement and reporting the student grade in their English class to meet the English assessment requirement. If a school district wants to use a bubble test that is fair, valid and reliable, then they can use the Iowa Assessment.

Four Drawbacks of the SBAC Test

As noted above, the new ESSA requires that an assessment system have four essential characteristics. These are fairness, reliability, validity and the ability to assess whether or not any given student is achieving "at grade level." We will next show that the SBAC test does not meet any of these four essential characteristics.

#1 The SBAC Test is not a Fair Test

The first mandatory characteristic is fairness. Fairness means that all students have an equal opportunity to pass the test. Here we will provide four examples of how teacher grades are much fairer than the SBAC test.

#1.1 The SBAC test is administered entirely online whereas the Iowa Assessments include paper and pencil assessments as an option.

Because the SBAC test is administered entirely online, the SBAC test requires students as young as 3rd grade to have typing, keyboarding and screen navigation skills. Recent research indicates that online tests discriminate against low income and minority students. Half of all low income students lack access to a computer at home while only 6% of middle to high income students lack access to a computer at home.

http://www.childtrends.org/?indicators=home-computer-access

As a consequence, low income students do not do as well as high income students on online tests. While the SBAC test does not have a paper and pencil version, the other Common Core test, called PARCC, has both a paper version and an online version. Education Week reported that students who took the PARCC test online got much lower scores than those who took the test with paper and pencil. "Students who took the 2014-15 PARCC exams via computer tended to score lower than those who took the exams with paper and pencil - a revelation that prompts questions about the validity of the test results and poses potentially big problems for state and district leaders."

"There is some evidence that, in part, the [score] differences we're seeing may be explained by students' familiarity with the computer-delivery system," said Jeffrey Nellhaus, PARCC's chief of assessment. In other words, lower income students who lack experience with computers tend to do worse than lower or higher income students who have experience with computers. The difference in scores is huge: "In December (2015), the Illinois state board of education found that 43 percent of students there who took the PARCC English/language arts exam on paper scored proficient or above, compared with 36 percent of students who took the identical exam online."

http://dianeravitch.net/2016/02/03/students-who-took-parcc-test-online-got-lower-scores/

The Illinois State Board of Education found that, across all grades, 50% of students who took the paper-based PARCC exam scored proficient, compared to only 32% of students who took the exam online.

http://blog.centerforpubliceducation.org/2016/02/03/parcc-test-results-lower-for-computer-based-tests/

This research indicates that because of a lack of computers in their homes, low income and minority students score about 10% to 20% lower on an online computer test than they score on an identical test that uses a paper and pencil format. A similar result occurred with two new Common Core High School Equivalency tests. The Pearson GED test is an online only test that only 30% of students can pass while the HiSET offers a paper and pencil version of their test that 60% of students can pass. Perhaps this is why, when low income struggling students are offered a choice between an online high stakes test and a paper and pencil high stakes test, over 80% choose the paper and pencil test. For example, New Hampshire offers the HiSET in both paper and online versions. In 2015, 85% of students chose to take the paper version of the HiSET test.

http://restoregedfairness.org/comparing-the-missouri-hiset-pass-rate-to-the-washington-pearson-ged-pass-rate

Therefore the fact that the SBAC test is only available in an online version is unfair to low income students who lack experience with computers in their home.

#1.2 The SBAC Test ignores high class sizes and incorrectly assumes that all students have had a fair opportunity to learn the skills covered on the test.

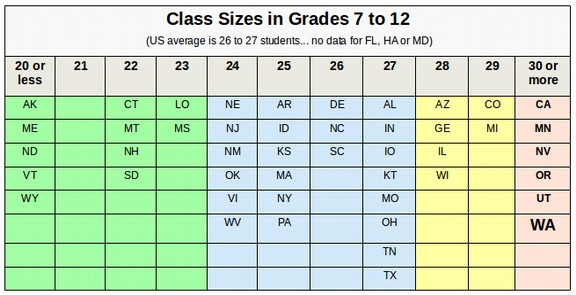

The SBAC test assumes that our schools are fully funded and that every student has a fully qualified teacher and small class sizes. Unfortunately, this is not currently the case in Washington state. Despite having one of the top ten economies in the nation, Washington state is 47th in the nation in school funding as a percent of income. As a result, Washington state has among the highest class sizes in the nation.

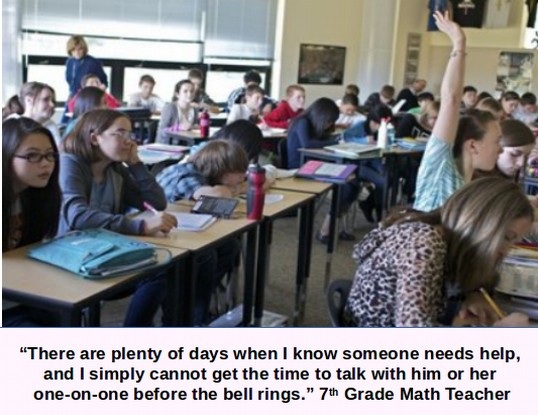

High class sizes mean not only that struggling students do not get the help they need, but that teachers are also placed under a great deal of stress. As just one example, the SBAC 11th grade math test includes Algebra II problems. My daughter's Algebra II teacher had 5 sections of Algebra II students with each class having about 35 students. The instructor was further required to comply with the Common Core math schedule. Because students kept asking questions, by the third week of the class, the instructor was over one week behind the Common Core schedule. He told the students they were no longer allowed to ask questions. After two more weeks in which the classes fell further behind, the instructor had a nervous breakdown and took the next 4 months off.

Because the state legislature refuses to fully fund public schools, the school district was unable to find a replacement Algebra II instructor. The series of unqualified instructors who babysat the classes for the next four months knew nothing about Algebra II and were unable to answer the students' questions. The original instructor returned in February 2016 but it is clear that these students will not have a fair chance to pass the SBAC math test because they did not receive instruction on the topics that will be on the test. This is not the fault of the math teacher or the school district or the school board. It is the fault of the state legislature which has utterly failed to fully fund our public schools in the manner required by our state constitution. But it will be struggling students who will be unfairly held accountable for the failure of the legislature to fund our schools.

#1.3 The SBAC Test incorrectly assumes that students have had several years to learn the Common Core standards.

Common Core standards begin in Kindergarten and assume a building block approach that carries through high school. This means that our high school students are being forced to learn and be tested on standards that they were never prepared for in either middle school or elementary school.

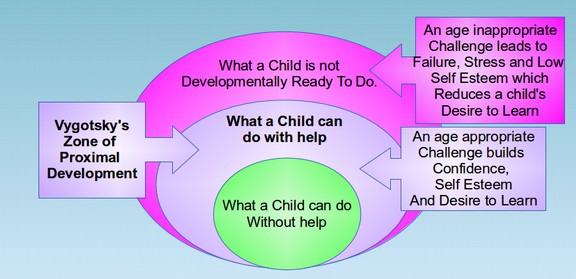

#1.4 The SBAC Test incorrectly assumes that the Common Core standards and Common Core Test questions are age appropriate.

The drafters of the Common Core standards did not include a single elementary school teacher or child development specialist. Instead, the Common Core standards ignored 100 years of research on child development. When the standards were first revealed in March 2010, many early childhood educators and researchers were shocked. "The people who wrote these standards do not appear to have any background in child development or early childhood education," wrote Stephanie Feeney of the University of Hawaii, chair of the Advocacy Committee of the National Association of Early Childhood Teacher Educators. This is why more than 500 of our nation's leading child development specialists signed a letter opposing the Common Core standards. The Joint Statement of Early Childhood Health and Education Professionals on the Common Core Standards Initiative was signed by educators, pediatricians, developmental psychologists, and researchers, including many of the most prominent members of those fields. This statement can be downloaded at the following link: https://coalitiontoprotectourpublicschools.org/phocadownload/joint_statement_on_core_standards.pdf

Their statement reads in part: "We have grave concerns about the core standards for young children…. The proposed standards conflict with research in cognitive science, neuroscience, child development, and early childhood education about how young children learn, what they need to learn, and how best to teach them…."

The statement's four main concerns are based on abundant research on child development—facts that all parents and policymakers need to be aware of:

1. The K-3 standards will lead to long hours of direct instruction. This kind of "drill and grill" teaching will push active, play-based learning out of many kindergartens.

2. The standards will intensify the push for more standardized testing, which is highly unreliable for children under age eight.

3. Didactic instruction and testing will crowd out other crucial areas of young children's learning: active, hands-on exploration, and developing social, emotional, problem-solving, and self-regulation skills—all of which are difficult to standardize or measure but are the essential building blocks for academic and social accomplishment and responsible citizenship.

4. There is little evidence that standards for young children lead to later success. The research is inconclusive; many countries with top-performing high-school students provide rich play-based, nonacademic experiences—not standardized instruction—until age six or seven. Our first task as a society is to protect our children. The imposition of these standards endangers them. The Core Standards will cause suffering, not learning, for many, many young children.

The National Association for the Education of Young Children is the foremost professional organization for early education in the U.S. Yet it had no role in the creation of the K-3 Core Standards. The Joint Statement opposing the standards was signed by three past presidents of the NAEYC—David Elkind, Ellen Galinsky, and Lilian Katz—and by Marcy Guddemi, the executive director of the Gesell Institute of Human Development; Dr. Alvin Rosenfeld of Harvard Medical School; Dorothy and Jerome Singer of the Yale University Child Study Center; Professor Howard Gardner of the Harvard Graduate School of Education; and many others.

How the SBAC Test Harms Students

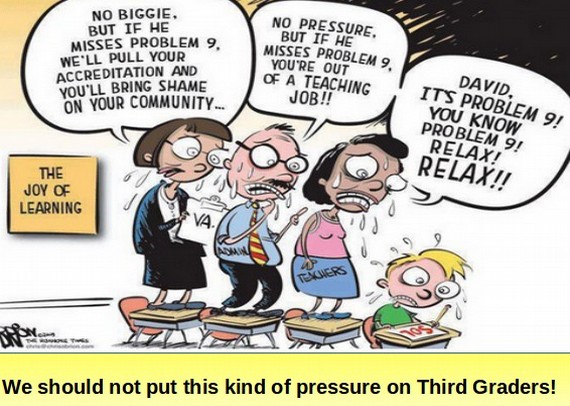

If the problems with the SBAC test were merely limited to the fact that it is not a fair, reliable, valid or accurate measure of whether students are at grade level, one might conclude that the test should be tolerated. But the SBAC test is so deeply flawed that it harms students by turning our schools from joyful, productive learning environments into toxic test prep pressure cookers where all students learn is to hate school. By forcing students to attempt tasks that they are not developmentally ready to perform and then labeling them as failures for being unable to perform these unreasonable tasks, the SBAC test is a form of child abuse.

High Stress Tests Increase Childhood Suicides

As a leader of Opt Out Washington, I have conducted meetings across the state on how and why parents can and should opt their kids out of the unfair SBAC Common Core test. At these meetings, parents often bring their own stories of how the SBAC test has harmed their kids. At a recent Opt Out meeting, a mom spoke tearfully about her Third Grade son who became depressed and attempted to kill himself after failing the Third Grade SBAC Common Core math test.

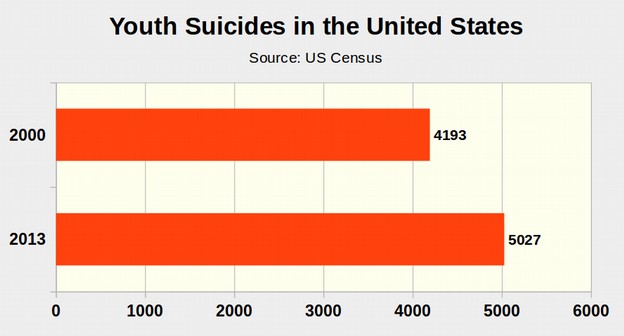

Since the introduction of developmentally inappropriate Common Core standards and unfair Common Core high stakes tests, there has been a 40 percent increase in the suicide rate of elementary school students in the US and a 20 percent increase in the suicide rate of high school students. Specifically, according to the US Census, in 2000, 205 elementary school children ages 5 to 14 committed suicide. In 2013, 286 children committed suicide, an increase of 40%. In 2000, 3,988 young adults ages 15 to 24 committed suicide. In 2013, 4,741 young adults committed suicide, an increase of 19%. In total, for ages 5 through 24, in 2000, before No Child Left Behind, 4,193 kids committed suicide. In 2013, the total number of kids who killed themselves was 5,027, an increase of 834 needless deaths per year or a 20% increase.
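The percentage increases quoted above can be checked with a few lines of arithmetic. The following is a minimal sketch that uses only the counts stated in this article; the function name `percent_increase` and the dictionary layout are illustrative choices, not part of any official data source.

```python
def percent_increase(before: int, after: int) -> float:
    """Return the percentage change from `before` to `after`."""
    return (after - before) / before * 100


# Counts as quoted in the article: (year 2000, year 2013).
counts = {
    "ages 5 to 14": (205, 286),
    "ages 15 to 24": (3988, 4741),
}

# Totals for ages 5 through 24, derived by adding the two groups.
total_2000 = sum(before for before, _ in counts.values())
total_2013 = sum(after for _, after in counts.values())

for group, (before, after) in counts.items():
    print(f"{group}: {percent_increase(before, after):.1f}% increase")

print(f"ages 5 to 24: {total_2013 - total_2000} more deaths, "
      f"{percent_increase(total_2000, total_2013):.1f}% increase")
```

Rounded to whole numbers, the three results match the 40%, 19% and 20% figures given in the text.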

According to the Alliance for Childhood: "There is growing evidence that the pressure and anxiety associated with high-stakes testing is unhealthy for children–especially young children–and may undermine the development of positive social relationships and attitudes towards school and learning. … Parents, teachers, school nurses and psychologists, and child psychiatrists report that the stress of high-stakes testing is literally making children sick."

The source of the problem is not federal law. It is that the Washington State Superintendent of Public Instruction has failed to comply with federal law by failing to provide the school district with an assessment system that is fair, reliable, valid and accurate. The good news is that the school district already has an assessment system that is fair, reliable, valid, accurate and nationally recognized in the form of teacher grades.

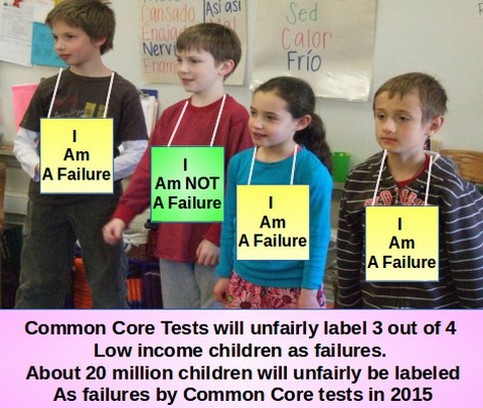

The SBAC Test is Particularly Unfair to Low Income and Minority Students

The SBAC test harms low income and minority students by labeling nearly 75% of them - even students performing at grade level - as failures.

These low income third graders, many of whom already face significant challenges in their home life, tend to internalize the failure to pass the SBAC test. They blame themselves for failing the test. They get depressed and give up on school and give up on life - falsely concluding that they are stupid because they cannot figure out how many boxes of paper clips there are and how many paper clips are in each box.

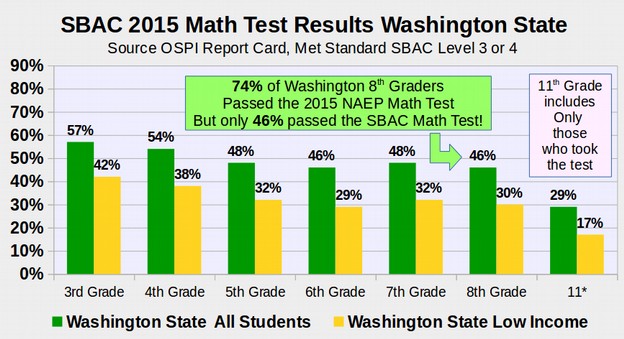

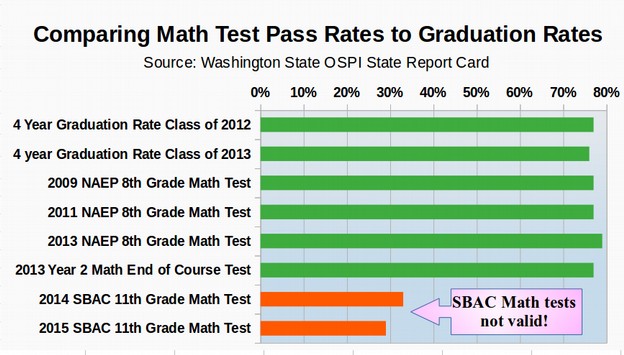

Washington State 8th graders are among the highest scoring 8th graders in the nation and in the world on national and international tests. Yet the unfair and inaccurate SBAC Math Test had the audacity to unfairly label 54% of them as failures!

* Over 60% of 11th graders opted out of the SBAC Math in Washington State. Thus, the number listed is "met standard excluding no scores." Keep in mind that these same Washington students scored second highest in the nation in 2009 on the NAEP 8th Grade Math Test.

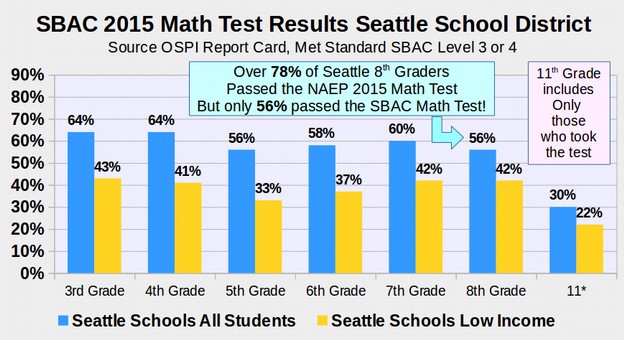

* Over 80% of 11th graders opted out of the SBAC Math Test in the Seattle School District. Thus, the number listed is "met standard excluding no scores." Keep in mind that the Seattle School District is above the Washington State average, far above the national average and one of the highest scoring urban school districts in the nation and in the world.

#2 The SBAC Test is not a Valid Assessment of Student Achievement

Validity refers to whether a test measures what it claims that it measures. Like promoters of the SAT test, SBAC test promoters incorrectly claim that the SBAC test can assess whether students are "college ready." In fact, there is no evidence that the SBAC test - or any bubble test - can reliably determine which students are career and college ready. Instead, the largest study ever conducted on the ability of bubble tests to determine college readiness - involving more than 81,000 students in California - found that the SAT test was no more able to determine college readiness than a coin toss! Instead, the most reliable predictor of college readiness was a student's high school Grade Point Average (GPA) – or the average of the combined grades from 24 teachers who worked with the student during their four years of high school.

http://www.cshe.berkeley.edu/sites/default/files/shared/publications/docs/ROPS.GEISER._SAT_6.13.07.pdf

A study by the Harvard Graduate School of Education also found that high school grades of students in New York were much more accurate in predicting college grades than scores on high stakes tests.

http://projects.iq.harvard.edu/files/eap/files/cuny_fgpa_prediction_8.26.2014_wp.pdf

Another 2014 study found that students who did not submit high stakes test scores performed as well in college as students who did submit high stakes test scores.

http://www.nacacnet.org/research/research-data/nacac-research/Documents/DefiningPromise.pdf

Put in plain English, high stakes bubble tests like the SBAC test are not as reliable or as valid as grades from teachers. Those who claim that the SBAC test can predict college readiness are not telling the truth. If the goal of an assessment system is to determine career and college readiness, then Teacher Grades are the most valid assessment system. However, the Iowa Assessment does include a "college readiness" scale that is matched to the ACT College Readiness test.

#3 The SBAC Test is not a Reliable Assessment System

Reliability refers to how repeatable the test is and how well the test questions match other test questions and other performance measures. The SBAC test is radically different from any other previous assessment of student achievement. The SBAC test is a variable item test that gives different questions to different students even in the same class. There are more than 40,000 questions just in the math portion of the SBAC test - making it impossible to tell which students get which questions.

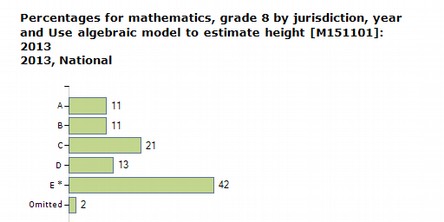

Furthermore, because the SBAC test has been developed in secrecy, no school board members, teachers or parents are able to independently review the reliability, validity or fairness of any particular test questions or any particular test. This utter lack of reliability stands in sharp contrast to the National Assessment of Educational Progress (NAEP), which has published its test questions along with the actual percentage of students who correctly answered each test question. For example, the following table shows that 42% of students in the United States got the correct answer on an algebra question about determining height from a formula. This question was therefore rated as a NAEP "medium" difficulty math question.

The lack of reliability of the SBAC test also stands in sharp contrast to the Iowa Assessment which has shown a strong correlation to the NAEP test and to student achievement.

#4 The SBAC Test is not an Accurate Test of Student Achievement

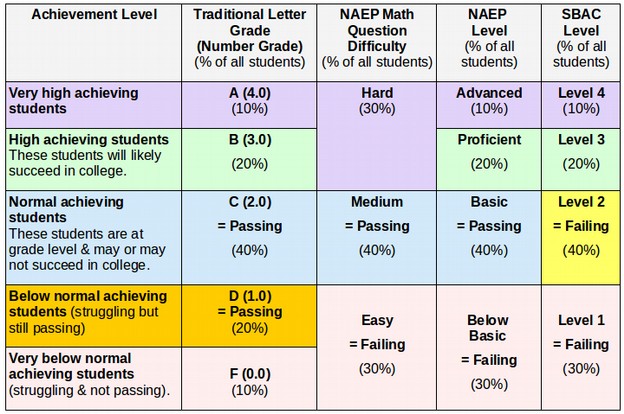

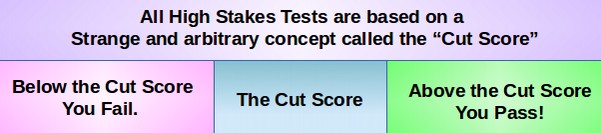

The SBAC test ignores the meanings of the terms Basic, Proficient and "At Grade Level." Since the term "at grade level" is specifically used in the new ESSA, the failure of the SBAC test to relate student achievement to grade level is perhaps its most important shortcoming. Historically, students were graded on a Bell Shaped Curve with the letters A, B, C, D and F. These grades were changed to number grades such as 4.0, 3.0, 2.0 and 1.0. The term "at grade level" means that a student has achieved a letter grade of A, B, or C. While D might be a passing grade, it indicates that the student is struggling and performing below grade level. But C work is at grade level and B work is above grade level.

Comparing Traditional Letter Grades to NAEP and SBAC Levels

While all standardized bubble tests are notorious for discriminating against low income and minority students, the most reliable, valid, transparent and highly researched standardized test is the National Assessment of Educational Progress (NAEP), which has been administered and researched for more than 30 years. Most people are familiar with the traditional letter grading system of A, B, C, D and F shown on the table above. Unfortunately, the SBAC test and NAEP tests use different grading systems. It is therefore important to understand how these various grading and ranking systems are related or not related.

You can see from the above table that NAEP Hard Math questions can be answered by A and B students while NAEP Medium Math questions can be answered by C students. Also, the NAEP ranking system made a controversial change to the traditional grading system. NAEP changed a D grade which has historically been a passing grade into a failing grade. This meant that to pass the NAEP test, with a score of Basic, a student needed to be "at grade level" with an average traditional grade of C. This change tripled the percent of students who were labeled as failures from 10% to 30% - without any change in the actual performance of the students.

The SBAC test went even further than the NAEP test. SBAC test designers had the audacity to even declare students who are at grade level and passing with a C grade as failures (SBAC level 2). This increased the percent of students who were labeled as failures from 30% to 70%. This is the reason the SBAC test fails two out of three students who take the test – it is designed to fail even students who are at grade level! This was done by manipulating the cut scores of the test.

In reality, student performance typically occurs along a continuous bell shaped curve. Traditionally, we have set cut scores so that about 10% of these students would get an A, 20% would get a B, 40% would get a C, 20% would get a D and about 10% would get an F and fail the test or fail the course. According to OSPI, the actual SBAC math test results for spring 2015 failed about 70% of all students, and those who failed were disproportionately students of color, English Language Learners, students with special needs and students living in poverty.
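The effect of moving the cut score described above can be reproduced from the traditional grade distribution. The sketch below assumes the 10/20/40/20/10 split stated in this article and the article's mapping of letter grades to NAEP and SBAC failure levels; the names `GRADE_SHARES` and `percent_labeled_failing` are hypothetical and chosen only for illustration.

```python
# Share of students (in percent) at each traditional letter grade,
# using the distribution stated in the article.
GRADE_SHARES = {"A": 10, "B": 20, "C": 40, "D": 20, "F": 10}


def percent_labeled_failing(failing_grades: list[str]) -> int:
    """Sum the share of students whose letter grade is treated as failing."""
    return sum(GRADE_SHARES[grade] for grade in failing_grades)


# Traditional grading: only F is a failing grade.
traditional = percent_labeled_failing(["F"])
# NAEP, per the article: D is also treated as failing.
naep = percent_labeled_failing(["D", "F"])
# SBAC, per the article: C (at grade level) is treated as failing too.
sbac = percent_labeled_failing(["C", "D", "F"])

print(f"Traditional: {traditional}%  NAEP: {naep}%  SBAC: {sbac}%")
```

Under these assumptions, the same students with the same performance go from a 10% failure rate to 30% and then to 70% purely because the cut score moves.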

Benefits of the Iowa Assessment over the SBAC Test

1. The Iowa Assessment Uses a Fairer and More Accurate Proficiency Standard

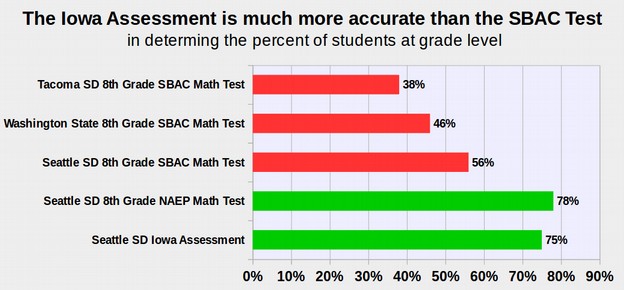

Based on the results from Kentucky and New York, there will not be a significant improvement in SBAC proficiency scores over time. However, if the Seattle School Board replaced the SBAC test with the Iowa Assessment, the proficiency scores would rise dramatically for three reasons. First, the Iowa Assessment is set for a Proficient Cut Score of 60% based on a national sample. Because the State of Washington is about 5% above the national average, this means that 65% of Washington students would be rated as Proficient with the Iowa Assessment. Because Seattle students are about 10% above the Washington State average, about 75% of Seattle students would be rated as Proficient using the Iowa Assessment. This is still slightly less than the 78% of Seattle students rated as Proficient using the NAEP test. But it is a very accurate representation of the percent of students who are "at grade level," which is the federal standard under the new ESSA. This close alignment to the federal standard is what makes the Iowa Assessment a fair, accurate, reliable and valid assessment.
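The arithmetic behind these estimates is simple enough to write down. The sketch below assumes, as the paragraph does, that the "5% above" and "10% above" figures are added as percentage points. The variable and function names are ours, for illustration only.

```python
NATIONAL_PROFICIENT_PCT = 60  # Iowa Assessment proficient cut, national sample
WASHINGTON_OFFSET_PTS = 5     # Washington relative to the national average
SEATTLE_OFFSET_PTS = 10       # Seattle relative to the Washington average

def estimate(base_pct, offset_pts):
    """Add a percentage-point offset, capped at 100%."""
    return min(100, base_pct + offset_pts)

washington_pct = estimate(NATIONAL_PROFICIENT_PCT, WASHINGTON_OFFSET_PTS)
seattle_pct = estimate(washington_pct, SEATTLE_OFFSET_PTS)

print(f"Washington: about {washington_pct}% proficient")
print(f"Seattle: about {seattle_pct}% proficient")
```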

Equally important, all questions have been screened by child development specialists to make sure they are age appropriate. No child will be asked a question that is age inappropriate. The fairness and age appropriateness of test questions is likely one reason that the State of Iowa has the highest graduation rate in the nation. It is because their students are not traumatized by being subjected to unfair and age inappropriate high stakes test questions. Iowa also has much smaller class sizes than Washington.

Seattle School District as an Example of Harm Inflicted on Students by Labeling "At Grade Level" Students as Failures

The four year high school graduation rate for the Seattle School District is about 76%. Because passing Teacher Grades is required to graduate, this high school graduation rate is a close proxy for the percentage of students whose Teacher Grades show they are at grade level. Thus, about 76% of all Seattle students have historically been "at grade level." On the 2014 Washington State 8th grade End of Course (EOC) math exam, 84% of Washington state students passed, while in the Seattle School District 87% passed the Math EOC exam. This indicates that the Seattle School District is at or above the Washington State percentage for students who are "at grade level" in the 8th grade. By comparison, in the Tacoma school district, only 41% of 8th Graders passed the Math EOC exam.

While both the Iowa Assessment and the NAEP test estimate that about 78% of Seattle School District students are at grade level, the SBAC test falsely claims that only 56% of Seattle School District students are at grade level. This confirms that the "standard" being measured by SBAC is not the same as the "at grade level" standard being measured by the NAEP math test and being measured by the Iowa Assessment. This is why the SBAC test is not a fair, reliable, valid or accurate assessment. The failure of the SBAC test to accurately measure the actual ability level of Seattle School District students can only partially be attributed to the misuse of the term "proficient." The fact that SBAC is a computer only test and that it gives different test questions to different students are also likely contributing factors to the unfair nature of the SBAC test. The following chart shows that the 11th grade SBAC math test is even less accurate in determining the percent of students at grade level than the 8th grade SBAC test.

Advantages of the Iowa Assessment

The Iowa Assessment was developed more than 70 years ago (in the 1930s), by teachers and child development specialists, before high stakes testing became a $10 billion per year industry and was taken over by Wall Street consultants out to make a quick buck. The Iowa Assessments have evolved numerous times with the most recent changes occurring in 2012 when the Iowa Assessment was aligned with the Common Core standards.

Norm Referenced Assessments versus Criterion Referenced Assessments?

Wall Street marketers claim that the Iowa Assessment system is not as useful a tool to measure "college readiness" as the tests they come up with. Their argument is that the Iowa Assessments are "norm-referenced" tests measured against a national sample, whereas the Wall Street tests, like SBAC, are supposedly "criterion-referenced" tests that claim to measure how a student performs against "college readiness" standards like the Common Core. The National Assessment of Educational Progress also claims that it is a Criterion Referenced Test. This is a misleading debate because both the SAT and the ACT are college readiness tests that are also norm referenced. Moreover, for more than 20 years, the Iowa Assessment has sought out and used learning standards from around the US to design and construct its test questions. The key thing that administrators and even Wall Street marketers use high stakes assessments for is to compare a state or school district to the national average. It therefore makes sense to use norming as a part of the benchmarking process. Otherwise, you wind up, as with SBAC, using completely arbitrary passing scores.

Beginning in 2012, the Iowa Assessments aligned their tests to the Common Core Standards. In fact, currently, the Iowa Standards are more aligned to the Common Core Standards than the National Assessment of Educational Progress!

The Iowa Math Assessment tests a broader range of skills than the NAEP math test. In addition, the Iowa Assessments now include a "college ready" score in addition to their "grade level" score based on an alignment study with the NAEP test.

Source: 2012 Iowa Assessment to NAEP Correspondence Study

https://itp.education.uiowa.edu/ia/documents/NCME_naep_2012_Paper.pdf

Diagnostic or Formative versus Summative Assessments?

Wall Street marketers who push the SBAC test claim that the SBAC test can also be used as a formative pretest. That is because formative assessments are the new buzzword. There is no evidence to support the idea that a national test that has nothing to do with the student's classroom or teacher can offer a valid and reliable formative assessment. Instead, formative assessments should be designed by the actual teacher for the actual students and the actual learning that the students will cover during their actual course.

Using the Iowa Assessment as a Pretest

The Iowa Assessments have always been designed to compare the progress of a single student over a period of years compared to the average student who is at grade level. Thus, the stated goal of the Iowa Assessments is more in line with the new ESSA test (to determine whether a student is achieving at grade level) than any other test in the nation. The Iowa Assessment writers specifically caution against using the Assessment scores in isolation; rather, they should be used in combination with teacher observations and other student work (aka teacher grades). However, because the Iowa Assessments use a building block progression to learning and skill building, they can be used as a pretest. And because they are much shorter than the SBAC test, they can be given without disrupting a student's schedule. And they can be scored immediately.

Length of Time Required for the Test

There are several subjects covered by the various Iowa Assessments. These include English, Reading, Writing, Math, Science and a couple other subjects. Unlike the SBAC tests which can take up to 9 hours or more than 4 hours for a single subject like English or Math, the Iowa Assessments are designed to be completed in a single class period. This key feature is important to avoid disrupting the schedule of the student or the schedule of the school. There is no taking the entire month of May off to perform SBAC testing. And as we noted above, they can be scored immediately!

Score Reporting

The Iowa Assessments report scores both in terms of percentiles (where a student stands in relation to the national average) and grade level (where the student stands in relation to being at grade level). This makes the Iowa test far better than the SBAC in complying with federal law to determine whether a student is achieving "at grade level" and what percentage of students are achieving at grade level in a given school, grade or school district.

Age Appropriate Vocabulary and Questions

The Iowa Assessments make a great effort to select "appropriate vocabulary that children are likely to encounter and use in daily activities, both in and out of school." This is in sharp contrast to SBAC and other Wall Street designed tests that have been shown to use vocabulary and questions that are two to three grade levels above the grade level of the student taking the test.

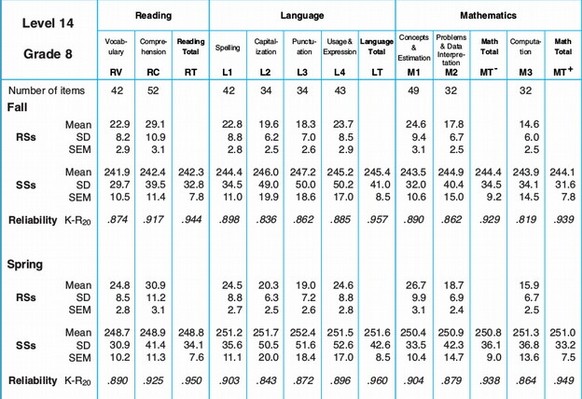

Iowa Assessments Reliability Research

The Iowa Assessment has undergone much more research using actual students in actual schools taking actual tests than any current assessment system other than the NAEP test. In fact, because the NAEP test gives different questions to different students in different states, while the Iowa Assessment gives students the same questions, the Iowa Assessments have even more evidence of reliability and validity than the National Assessment of Educational Progress. The reliability of the Iowa Assessments has always been more than .90 (same as the NAEP test). Below is a table from page 73 of the Reliability statistics for the 2000 National Standardization of the 8th Grade ITBS which was given in the Fall and then replicated in the Spring:

Again, the reliability was very high and the standard error of measurement was very low. This is an example of a highly reliable test with a low level of variability.
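For readers who want to see how reliability and the standard error of measurement are connected, here is a minimal sketch using the standard classical test theory relation, SEM = SD × sqrt(1 − reliability). The score standard deviation of 20 points is a hypothetical value chosen for illustration and is not taken from the ITBS report.

```python
import math

def standard_error_of_measurement(score_sd, reliability):
    """Classical test theory: SEM = SD * sqrt(1 - reliability)."""
    if not 0.0 <= reliability <= 1.0:
        raise ValueError("reliability must be between 0 and 1")
    return score_sd * math.sqrt(1.0 - reliability)

HYPOTHETICAL_SCORE_SD = 20.0  # assumed for illustration only

sem_at_90 = standard_error_of_measurement(HYPOTHETICAL_SCORE_SD, 0.90)
sem_at_70 = standard_error_of_measurement(HYPOTHETICAL_SCORE_SD, 0.70)

print(f"Reliability .90 -> SEM of about {sem_at_90:.1f} points")
print(f"Reliability .70 -> SEM of about {sem_at_70:.1f} points")
```

The higher the reliability, the smaller the band of uncertainty around any one student's score.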

Average Level of Difficulty of Questions is .6

This means that 60% of students can answer the average difficulty question. By contrast, the SBAC test is set so that only 30% of students can answer the average difficulty question. On page 90 of the ITBS 2002 Research Report is the following quote: "Careful test construction led to an average national item difficulty of about .60 for spring testing. At the lower grade levels, tests tend to be slightly easier than this; at the upper grade levels, they tend to be slightly harder. In general, tests with average item difficulty of about .60 will have nearly optimal internal consistency-reliability coefficients. Nearly all tests and all levels have some items with difficulties above .8 as well as some items below .3." The tables then confirmed that the level of difficulty of the K and First Grade tests was .7, the Second Grade tests was .65, and Third Grade on was set for .6. This fact is likely why the National Assessment of Educational Progress level of difficulty is set for .6 and why the GED test (prior to 2014) was also set for .6.
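Item difficulty is simply the share of students who answer a question correctly. The sketch below shows the calculation on a small made-up answer sheet. The five students and four questions are hypothetical and are not data from the ITBS report.

```python
def item_difficulty(responses):
    """Return, for each question, the fraction of students who answered it correctly.

    responses: one list per student, with 1 for a correct answer and 0 for an incorrect one.
    """
    n_students = len(responses)
    n_items = len(responses[0])
    return [
        sum(student[i] for student in responses) / n_students
        for i in range(n_items)
    ]

# Hypothetical answer sheet: 5 students (rows) by 4 questions (columns).
HYPOTHETICAL_RESPONSES = [
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 1, 1, 1],
    [1, 0, 0, 1],
    [0, 1, 0, 0],
]

difficulties = item_difficulty(HYPOTHETICAL_RESPONSES)
average_difficulty = sum(difficulties) / len(difficulties)

print("Difficulty of each question:", difficulties)
print(f"Average difficulty: {average_difficulty:.2f}")
```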

8th grade Iowa Assessment is partially predictive of HS GPA and College GPA.

In analyzing the question of whether the Iowa Assessment could predict high school or college GPA, the researchers concluded:

"Course grades in the middle school years tended to be better predictors of high school performance than test scores, suggesting unique factors influence grades." ITBS 2002 Research Report page 47

Computer versus Paper and Pencil Tests?

Beginning in 2013, the Iowa Assessments come in both a computer and a paper version. There is a slight difference between the two tests because the computer version of the Iowa Assessments includes a few experimental "computer adaptive" questions. In 2014, researchers released the first comparative study of the paper versus the computer version of the Iowa Assessments.

https://itp.education.uiowa.edu/ia/documents/Iowa_Assessments_Comparability_Study.pdf

3,600 students from 20 school districts were randomly assigned to take either (1) the paper-and-pencil (P&P) version first and the online version second or (2) the online version first and the P&P version second. Unfortunately, only 10% of the sample was from high poverty school districts, which are the ones that will have the most difference between computer and paper tests, so we cannot be entirely confident in the results of this study. Surprisingly, the correlations between the test versions were very high, ranging from .7 to .9. The conclusion was that the online and paper tests yielded nearly identical results.
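A correlation of this kind can be computed as a Pearson coefficient between each student's paper score and online score. The sketch below is a plain implementation of that formula. The five pairs of scores are hypothetical, and we do not know which exact statistic the study's authors used.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length lists of scores."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sum((x - mean_x) ** 2 for x in xs)
    spread_y = sum((y - mean_y) ** 2 for y in ys)
    return covariance / math.sqrt(spread_x * spread_y)

# Hypothetical scores for the same five students on each version of the test.
PAPER_SCORES = [210, 225, 240, 255, 270]
ONLINE_SCORES = [214, 220, 245, 250, 272]

r = pearson_r(PAPER_SCORES, ONLINE_SCORES)
print(f"Correlation between paper and online scores: {r:.2f}")
```

A value near 1 means students rank in nearly the same order on both versions. It does not by itself show that any one group of students, such as low income students, is unaffected by the switch to a computer.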

However, we would still urge caution in using the computer version of the Iowa Assessment for two reasons. First, the study did not include a representative sample of low income students. It is known that lower income students are less likely to possess computers at home and therefore are less likely to possess computer keyboarding skills.

Second, young children before the 6th grade are also likely to possess poor computer skills and poor keyboarding skills. We would therefore recommend not using the computer version of the Iowa Assessment before students are in the 6th Grade.

Finally, even though the Iowa Assessments have made a great effort to make their tests age appropriate, young children below the Fifth Grade often lack emotional regulation skills and may freeze during a high stakes test. We therefore recommend that high stakes tests not be given to students before the 5th Grade and that Teacher Grades, projects or other methods be used to determine whether younger students are at grade level.

As noted earlier, the new ESSA requires that an assessment system have four essential characteristics. These are fairness, reliability, validity and the ability to accurately assess whether or not any given student is achieving "at grade level." In addition, we think it is important to also look at whether the test comes in a paper as well as a computer version and whether the test is age appropriate for younger students and whether the test questions have an appropriate level of difficulty for the average student. Given the current focus on "college readiness" we will also include whether there is any evidence that a particular test can predict college readiness. Finally, there is the legal question of whether an assessment system is already approved by OSPI and therefore can be used to meet federal reporting requirements under ESSA.

We therefore present this Table as a means of comparing various test options.

| TEST OPTIONS | Teacher Grades | Iowa Assessments | NAEP | SBAC |
| --- | --- | --- | --- | --- |
| Fair | YES | YES | YES | NO |
| Reliable | YES | YES | YES | NO |
| Valid | YES | YES | YES | NO |
| Accurate | YES | YES | YES | NO |
| Aligned to Standards | YES | YES | ?? | ?? |
| Age Appropriate | YES | YES | YES | NO |
| Objective | ??? | YES | YES | YES |
| Paper Version | YES | YES | YES | NO |
| Appropriate Difficulty | YES | YES | YES | NO |
| Measures College Readiness | YES | Claims Yes, Really NO | Claims Yes, Really NO | Claims Yes, Really NO |
| Time to complete | 1 Hour | 3 Hours | 3 Hours | 9 Hours |
| Feedback Report | 1 Day | 4 Days | 6 months | 4 months |
| Approved by OSPI | YES | ??? | NO | YES |

An Example of Why Common Core Standards and the SBAC test are not Age Appropriate

It is not merely the SBAC test that must be replaced, it is also the Common Core standards. Here is an example of how terrible these standards are:

According to the Alliance for Childhood: "There is growing evidence that the pressure and anxiety associated with high-stakes testing is unhealthy for children–especially young children–and may undermine the development of positive social relationships and attitudes towards school and learning. … Parents, teachers, school nurses and psychologists, and child psychiatrists report that the stress of high-stakes testing is literally making children sick."

Here is how such age inappropriate questions and tests harm young children:

A parent writes: "Recently, my 10-year-old daughter asked me what it would take for me to let her stay home from school forever... Not tomorrow. Not next week. Forever. She said: 'I'm too stupid to do that math.' Your child is broken in spirit when they have lost their confidence and internalized words like stupid. That damage is not erased easily."

Are You As Smart as a Common Core Second Grader?

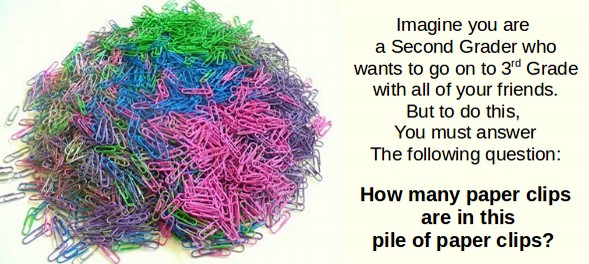

Here is an example of the kind of ridiculous test questions this Third Grader was expected to answer. (Note that this question was taken from the nation's most popular Second Grade Common Core Math Book, called Math Expressions.) According to the publisher, Houghton Mifflin Harcourt, "Math Expressions Common Core focuses on the priority core concepts at each grade level, identified by the Common Core State Standards, to build in-depth understanding of major mathematical ideas."

http://www.oswaltacademy.org/ourpages/auto/2011/4/27/44055921/Homework_Remembering_SE_Grade_2%20copy.pdf

In Unit 6, Lesson 5, Problem 4 is the following question for Second Graders:

"Brian has some boxes of paper clips. Some boxes hold 10 clips and some boxes hold 100. He has some paper clips left over. He has three more boxes with 100 paper clips than he has boxes with 10 paper clips. He has two fewer paper clips left over than he has numbers of boxes with 100 paper clips. What number of paper clips could he have?"

Remember that Second Graders are small children. See class below.

The Paper Clip question requires several levels of abstract reasoning. First, the child must imagine an unknown number of boxes that have ten paper clips in them. This number could be any number between one and one million (even though many Second Graders have no experience with numbers greater than 100). We will call this abstract number Z.

Second, the child has to also imagine an unknown number of boxes that have 100 paper clips in them. This number also can be between one and one million. We will call this abstract number Y.

Third, the child has to imagine an unknown number of loose paper clips that are not in either any of the boxes of ten paper clips or the boxes of 100 paper clips. The number of loose paper clips can also be any number between one and one million. We will call this abstract number X.

Then, the child has to consider the relationships between these three imaginary numbers. These relationships are really algebraic equations such as:

X (the loose paper clips) = Y (the number of boxes of 100 paper clips) minus two.

Another equation is Y (the number of boxes of 100 paper clips) = Z (the number of boxes with 10 paper clips) plus 3.

Because one cannot solve for three unknowns with only two equations, there is also a hidden condition not even mentioned in the problem: X, Y and Z must all be positive numbers. Therefore if Z = 1, Y must equal 4. If Z = 2, then Y must equal 5. If Z = 3, then Y must equal 6. This presents us with an infinite series of correct answers. The idea of an infinite number of correct answers is also an abstract concept well beyond the ability of most Second Graders. But even if the child is able to solve each of these equations, the child still needs to combine all three values, because the question is not asking for X, Y or Z but for the total number of paper clips, which is yet a fourth algebraic equation: A (answer) = 100Y + 10Z + X.
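To show how many steps are hidden in this "Second Grade" question, here is a short sketch that lists the first few correct answers. It follows the relationships in the problem (Y = Z + 3 and X = Y − 2) and counts the clips themselves: 100 for each large box, 10 for each small box, plus the loose clips. The function name and the choice to start at one small box are our own assumptions.

```python
def paper_clip_answers(max_small_boxes):
    """List (Z, Y, X, total) for Z = 1 up to max_small_boxes.

    Z = boxes of 10 clips, Y = boxes of 100 clips, X = loose clips.
    """
    answers = []
    for z in range(1, max_small_boxes + 1):
        y = z + 3                      # three more boxes of 100 than boxes of 10
        x = y - 2                      # two fewer loose clips than boxes of 100
        total = 100 * y + 10 * z + x   # total number of paper clips
        answers.append((z, y, x, total))
    return answers

for z, y, x, total in paper_clip_answers(3):
    print(f"{z} box(es) of 10, {y} boxes of 100, {x} loose clips -> {total} paper clips")
```

Every additional box of 10 produces another valid answer, so the list never ends.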

Each of these steps requires abstract reasoning far beyond the ability of most Second Graders and in fact, beyond the ability of most adults and most state legislators! Even knowing all of these clues, which a small child is not likely to understand, most adults and most state legislators still could not solve this problem.

Have you figured it out yet? Do not feel bad if you have trouble answering this math puzzle. We have given this problem to state legislators who were unable to solve it. The fact that state legislators are requiring Second Graders to solve math puzzles that legislators themselves cannot solve is an indication of how inappropriate the Common Core tests are for very young children.

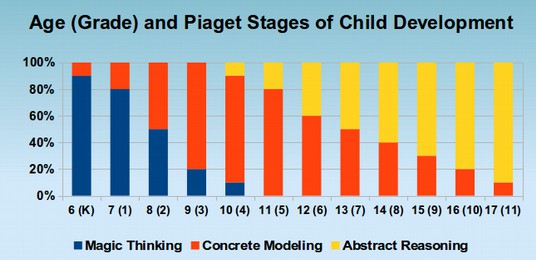

The authors of Common Core and the SBAC test are apparently not aware that Second Graders and Third Graders are still in the concrete thinking stage of brain development and are not capable of abstract reasoning. Children are not capable of abstract reasoning until about the Sixth to Eighth Grade. So this question is at least four to six years beyond the brain development of a Second Grader.

What I will do as Superintendent to Protect Washington Students from the Toxic SBAC test

The new ESSA states on page 24 that its purpose is to determine "whether the student is performing at grade level." Because the SBAC test does not provide accurate information about whether a student is achieving at grade level, the SBAC test does not comply with the ESSA. As superintendent, I will therefore end the SBAC test not just as a graduation requirement but completely in all grades - and replace it with assessment options such as Teacher Grades and/or the Iowa Assessment that do accurately measure whether a student is achieving at grade level. As always, we look forward to your questions and comments!

Regards,

David Spring, M.Ed.

Candidate for Superintendent of Public Instruction

Spring for Better Schools.org


Source: https://springforbetterschools.org/7-end-high-stakes-testing/why-i-oppose-the-toxic-sbac-test
